My last post showed how to apply a classic software integration pattern to the new world of AI Engineering. This post shows how to improve the Router’s ability to make the right call. You can get the companion notebook for this post on my GitHub.

I was inspired by three very useful cookbooks on OpenAI Evals by Josiah Grace (OpenAI), but really it’s a post for me to keep track of my learnings. I found OpenAI Evals tricky to wrap my head around. There’s not a ton of technical documentation, LLMs aren’t good at writing Eval code, OpenAI offers several different tools, and the SDK is just plain tough to follow.

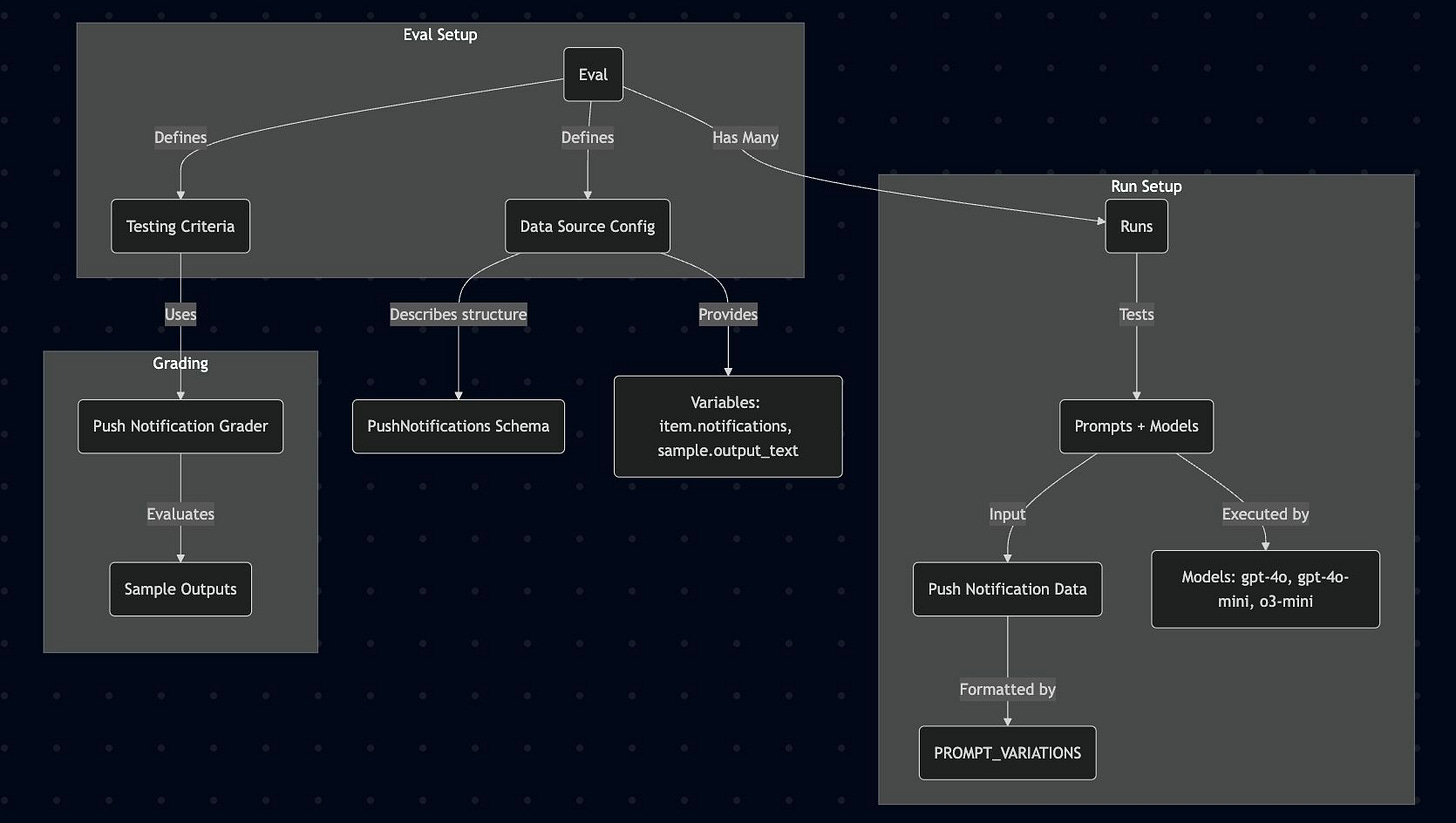

Quick Visual of Evals

ChatGPT is a great tool to help understand code by visualizing it. I uploaded Josiah’s notebook from his Bulk Prompt Experimentation post and asked it to create a Mermaid-compatible diagram. Take a minute to digest this.

Use Case: Routing Budget Requests with LLMs

I’ve been experimenting with making drudge work more fun by gamifying it. My current project is an app that helps enterprise departments (e.g. IT, HR, Facilities) create high-quality annual budgets. It’s a jumpscare/escape game a la Five Nights at Freddy’s where the evil Finance department is fed up with your delays, and they’re coming for you.

In the game, you’re chatting with an AI friend who helps with web research, adds items to your budget, and compiles everything into a spreadsheet you can download. It’s kind of ridiculous but makes me laugh so I’m sticking with it. Maybe I’ll release it as a product. Maybe not. Either way, it’s a great project for learning.

You can see some of the gameplay here.

A tricky part of building this app is getting the LLM to figure out, with high accuracy, what to do based on what the user asks for.

For example, a user might say:

"Create a budget item for 10 Mac M1s. They cost $3,500 each."

The system should recognize that this requires structured data and route it to the structured_response handler so that it can add a line item to the budget. But other requests might need a chat (chat_response) or a data export (download_file).
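To make the routing idea concrete, here's a rough sketch of how the router's answer could be mapped to a handler. The three route names come from this post; the handler bodies, the `dispatch` helper, and the fall-back-to-chat behavior are assumptions for illustration, not the app's actual code.

```python
from typing import Callable, Dict

# Hypothetical handlers: the real app would add a budget line, chat, or export a file.
def structured_response(user_text: str) -> str:
    return f"structured_response: {user_text}"

def chat_response(user_text: str) -> str:
    return f"chat_response: {user_text}"

def download_file(user_text: str) -> str:
    return f"download_file: {user_text}"

# Route names from the post mapped to their handlers.
ROUTES: Dict[str, Callable[[str], str]] = {
    "structured_response": structured_response,
    "chat_response": chat_response,
    "download_file": download_file,
}

def dispatch(route: str, user_text: str) -> str:
    """Call the handler named by the router's output.

    Assumption: an unrecognized route falls back to plain chat.
    """
    handler = ROUTES.get(route.strip(), chat_response)
    return handler(user_text)

print(dispatch("download_file", "Save it!"))
```

Because the router's output is just a string, a wrong answer silently sends the user down the wrong path, which is why measuring it matters.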

Generally speaking, proper routing is critical to the user experience — if the LLM gets it wrong the user will be confused, at best, or dismiss the app as “broken” and never use it again. The stakes are much higher with something you rely on, like an Agent for healthcare decisions.

From the developer perspective, messing around with a couple of different prompts until you find one that feels good is a bad idea. A better one is to test a variety of prompts and models over a large corpus of data to get some real numbers.

Evals for Better Get Done

Evals are task-oriented and iterative. They're the best way to check how your LLM integration is doing and to improve it.

In the following eval, we focus on the task of picking the model-prompt combination that provides the best performance, quality, and cost for our use-case:

We've created an LLM-based Router for our budget chatbot. The router's job is to triage user requests and route them to the best component to meet the objective. Components can be other AI functions or native function calls that don't require AI.

We have a predetermined set of possible routes the model can choose from.

We have created a dataset of user inputs and the ideal response for comparison.

We want to see how detailed our prompt needs to be to achieve a high percentage of matches between the model output and the ideal response as labeled in our data file.

Data

First, I made a file with 100 records. Each record has an input showing what the user might ask and the ideal response. For example:

{"item": {"input": "Create a budget item for 10 Mac M1s. They cost $3500 each.", "ideal": "structured_response"}}

{"item": {"input": "Save it!", "ideal": "download_file"}}

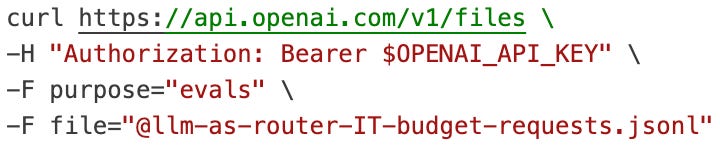

{"item": {"input": "What are some IT helpdesk MSPs in the greater Boston area?", "ideal": "chat_response"}}

Next, upload the file to OpenAI.

This returns a file ID that I assign to each Run later.
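Here's a minimal sketch of the data step: writing records in the JSONL shape shown above and uploading the file. The helper names and the `router_eval.jsonl` filename are my own placeholders. The upload helper takes the client as a parameter and assumes it is an `openai.OpenAI()` instance whose Files API accepts `purpose="evals"`.

```python
import json
from pathlib import Path

# The three example records from the post, as (input, ideal) pairs.
EXAMPLES = [
    ("Create a budget item for 10 Mac M1s. They cost $3500 each.", "structured_response"),
    ("Save it!", "download_file"),
    ("What are some IT helpdesk MSPs in the greater Boston area?", "chat_response"),
]

def write_jsonl(records, path):
    """Write one {"item": {...}} JSON object per line."""
    with Path(path).open("w", encoding="utf-8") as f:
        for user_input, ideal in records:
            row = {"item": {"input": user_input, "ideal": ideal}}
            f.write(json.dumps(row) + "\n")

def upload_eval_file(client, path):
    """Upload the JSONL file and return its file ID.

    Assumption: `client` is an openai.OpenAI() instance.
    """
    with open(path, "rb") as f:
        uploaded = client.files.create(file=f, purpose="evals")
    return uploaded.id

# Usage (needs the openai package and an API key):
#   from openai import OpenAI
#   write_jsonl(EXAMPLES, "router_eval.jsonl")
#   file_id = upload_eval_file(OpenAI(), "router_eval.jsonl")
```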

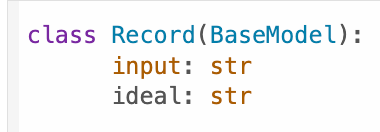

In the notebook I have a class that makes it easy to handle the data in the Eval. (Note that "item" seems to be an internal key used by OpenAI Evals, so we don't need to include it in our class.)

This structure lets me reference record data like so: {{item.ideal}}
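A class like that could look roughly like this. It's a sketch that assumes Pydantic v2 and a `Record` model with the two fields from the data file; the notebook's actual class may differ.

```python
import json
from pydantic import BaseModel

class Record(BaseModel):
    """One row of the dataset, without the outer "item" wrapper."""
    input: str   # what the user typed
    ideal: str   # the route we expect the router to pick

# Parse one line of the JSONL file and validate the part under "item".
line = '{"item": {"input": "Save it!", "ideal": "download_file"}}'
record = Record.model_validate(json.loads(line)["item"])

# The field names are what the templates refer to,
# e.g. {{item.input}} and {{item.ideal}}.
print(record.ideal)
```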

Setting up your eval

An Eval holds the configuration that is shared across multiple Runs. It has two components:

Data source configuration

data_source_config: the schema (columns) that your future Runs conform to. Our data_source_config uses the Record.model_json_schema() function to define what variables are available in the Eval.
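As a sketch, the data source configuration can be a plain dictionary built from the model's JSON schema. I'm assuming the `custom` data source type here, with `include_sample_schema` turned on so the model's output is available to graders; `Record` is repeated only so the snippet runs on its own.

```python
from pydantic import BaseModel

class Record(BaseModel):
    input: str
    ideal: str

data_source_config = {
    "type": "custom",                           # we supply our own rows
    "item_schema": Record.model_json_schema(),  # exposes item.input and item.ideal
    "include_sample_schema": True,              # also expose the model's output (sample.*)
}

print(sorted(data_source_config["item_schema"]["properties"]))
```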

Testing Criteria

testing_criteria: how you'll determine if your integration is working for each record.
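Since the goal is a match between the model output and the labeled ideal, one plausible criterion is an exact string comparison. This is a sketch assuming the `string_check` grader; the grader name is made up, and the notebook may use a different grader.

```python
# Assumption: pass a record only when the model's text equals the label.
exact_match = {
    "type": "string_check",
    "name": "route matches ideal",       # hypothetical display name
    "input": "{{sample.output_text}}",   # what the model returned for this record
    "operation": "eq",                   # pass only on equality
    "reference": "{{item.ideal}}",       # the label from the data file
}

testing_criteria = [exact_match]
```

An exact match is strict: any extra words around the route name count as a failure, so the prompt has to ask for the route name and nothing else.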

Create Eval

Creating an Eval means registering it on OpenAI's platform. It acts as a template so you can run it many times with different datasets, duplicate it, etc.

We create it here and store the result so we can reference the Eval's ID later on.
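A minimal sketch of that step, assuming `client` is an `openai.OpenAI()` instance and that the two configuration pieces described above are already built. The helper name and the default Eval name are placeholders.

```python
def create_router_eval(client, data_source_config, testing_criteria,
                       name="budget-router-eval"):
    """Register the Eval on the platform and return its ID.

    Assumption: `client` is an openai.OpenAI() instance.
    """
    eval_obj = client.evals.create(
        name=name,
        data_source_config=data_source_config,
        testing_criteria=testing_criteria,
    )
    return eval_obj.id

# Usage (needs the openai package and an API key):
#   from openai import OpenAI
#   eval_id = create_router_eval(OpenAI(), data_source_config, testing_criteria)
```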

Create Prompts of Increasing Specificity

We start with a generic prompt and build on it (partial view).
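To illustrate the idea of layering, here's a sketch where each prompt extends the previous one. The wording below is invented for this example and is not the notebook's actual prompt text; only the three route names come from the post.

```python
ROUTES = ("structured_response", "chat_response", "download_file")

# Level 1: generic instruction only.
generic = (
    "You route user requests for a budgeting assistant. "
    "Reply with exactly one of: " + ", ".join(ROUTES) + "."
)

# Level 2: add a one-line description of each route.
with_descriptions = generic + (
    "\nstructured_response: the user wants to add or change a budget line item."
    "\nchat_response: the user wants to chat or asks a research question."
    "\ndownload_file: the user wants to save or export the budget."
)

# Level 3: add a worked example (kept out of the eval dataset to avoid leakage).
with_examples = with_descriptions + (
    "\nExample: 'Export my budget to Excel' -> download_file"
)

prompts = {
    "generic": generic,
    "descriptions": with_descriptions,
    "examples": with_examples,
}

for name, text in prompts.items():
    print(name, len(text))
```

Keeping the prompts in a dictionary gives each one a short name that can be reused when labeling Runs.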

Models

This is where we define the list of models to try.

models = ["gpt-4o-mini", "gpt-4.1-mini", "gpt-4.1-nano"]

Creating Runs

Our Eval, prompts, and models are set so now we can create Runs. To do this, we make a nested loop so every prompt is tested against every model.

The input_messages template shows where we pass in the prompt as a parameter, along with {{item.input}} from the dataset.

You may notice that we're not looping over our dataset in the code. This is because the eval engine does that for us. As long as we tell the Eval how to access the data (done here via the file_id), we're good to go.
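The nested loop can be sketched like this. I'm assuming the `completions` data source type, a system/user message pair, and a `client` that is an `openai.OpenAI()` instance; the helper names and the run naming scheme are placeholders.

```python
def build_data_source(prompt, model, file_id):
    """Describe one Run: which model, which messages, which data file."""
    return {
        "type": "completions",
        "model": model,
        "input_messages": {
            "type": "template",
            "template": [
                {"role": "system", "content": prompt},
                # Filled in by the eval engine for every row of the file.
                {"role": "user", "content": "{{item.input}}"},
            ],
        },
        "source": {"type": "file_id", "id": file_id},
    }

def create_runs(client, eval_id, file_id, prompts, models):
    """Create one Run per (prompt, model) pair.

    `prompts` maps a short name to the prompt text.
    Returns a list of (prompt_name, model, run_id) tuples.
    """
    created = []
    for prompt_name, prompt in prompts.items():
        for model in models:
            run = client.evals.runs.create(
                eval_id=eval_id,
                name=f"{prompt_name}-{model}",
                data_source=build_data_source(prompt, model, file_id),
            )
            created.append((prompt_name, model, run.id))
    return created
```

With three prompts and the three models listed above, this would create nine Runs, each covering every record in the file.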

View Results

Lastly, the notebook has some code to plot the results of our runs. You can get the same thing from OpenAI’s Evals dashboard but why not save an alt-tab by charting it here?
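Before plotting, the scores have to be collected. Here's a rough sketch that computes a pass rate per prompt/model pair. It assumes each finished Run exposes `result_counts.passed` and `result_counts.total`, and that `client` is an `openai.OpenAI()` instance; the helper names are placeholders.

```python
def collect_scores(client, eval_id, runs):
    """Return {(prompt_name, model): pass_rate} for finished Runs.

    `runs` is a list of (prompt_name, model, run_id) tuples.
    Runs that are still in progress will report incomplete counts.
    """
    scores = {}
    for prompt_name, model, run_id in runs:
        run = client.evals.runs.retrieve(run_id, eval_id=eval_id)
        counts = run.result_counts
        scores[(prompt_name, model)] = (
            counts.passed / counts.total if counts.total else 0.0
        )
    return scores

def ranked(scores):
    """Sort combinations from best to worst pass rate."""
    return sorted(scores.items(), key=lambda pair: pair[1], reverse=True)
```

The resulting dictionary can be handed to any charting library, with one bar per prompt/model pair.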

We see that the score correlates more with the prompt than with the model, though both matter.

Conclusion

Based on these results, I’m going with the most elaborate prompt and with gpt-4o-mini ($0.15 per million input tokens) rather than gpt-4.1-mini ($0.40 per million input tokens).